Today, technology moves faster than ever, and the ways young people use it evolve just as quickly. New apps, social trends, and cultural norms constantly shift young people’s behaviors online.

To defend children from sexual abuse in our connected world, it’s critical that we understand how children’s online behaviors can make them vulnerable to those looking to do them harm.

For this, research is essential. That’s why in 2019, Thorn significantly increased our investment in youth-centered research initiatives to deepen our understanding of the experiences and risks youth face online. The insights we uncover are powerful for informing our own strategy, and for connecting and advancing child safety efforts worldwide. Our findings have driven everything from prevention and intervention programs to Trust & Safety measures and national policies.

The digital age has made child sexual abuse far more complex, diverse, and easy to commit.

By shining a light on the many factors that can put children at risk of sexual abuse and exploitation, our research helps the entire child safety ecosystem take meaningful action.

Why research matters in a digital world

Today, with technology at their fingertips, bad actors also move quickly. They’ve become adept at exploiting young people’s desire for social connection and for building communities online.

By understanding children’s online behaviors, we can better grasp how bad actors might exploit these trends while using emerging technologies, like generative AI, to their advantage. For example, predators are already using gen AI to expand solicitation, grooming, and sextortion efforts, and to produce child sexual abuse material (CSAM) at scale.

Gaining awareness of such trends and monitoring them is crucial. A recent surge in sextortion for financial gain is one such alarming trend, one that primarily targets teen boys and has led to tragic outcomes. Through Thorn’s original and partner research initiatives, we can better understand how, why, and to whom these devastating crimes are happening.

Our methodologies

Thorn’s research discipline was born of our innovative spirit. When we first set out to review the existing data on the threats children face online, we realized how little was actually out there. Faced with this challenge, we took up the charge ourselves, developing our own robust research program.

Today, Thorn employs four full-time subject matter experts. Together, our team has surveyed more than 8,000 9- to 17-year-olds, published groundbreaking and globally recognized reports, and briefed sector stakeholders in industry and law enforcement, as well as the media. Just as importantly, they’ve informed Thorn’s own internal strategy, products, and programs.

We know that elevating the voices of youth and others with first-hand experience is critical to understanding the true nature of these issues. That’s why our team conducts surveys and interviews with young people, caregivers, law enforcement investigators, other child safety advocates, Trust & Safety teams, and more. Then, by analyzing complex data sets, we create holistic pictures of the issues.

We hope our research ultimately leads to more data-driven interventions, a broader research culture, and a more expansive body of insights to combat sexual harms against children.

Our research areas

At Thorn, we tackle several areas of research focused on the online experiences of youth and the intersection of child sexual abuse and technology. These areas include:

Child sexual abuse material (CSAM): Child sexual abuse material refers to sexually explicit content involving a child. The scale of CSAM online has increased at staggering rates, accelerated by the digital age.

Sexting and consensual sharing: Many youth engage in sexting or sharing “nudes,” either consensually or nonconsensually. The dissemination of these images poses significant risks to the minors depicted, and the images can be used for grooming or exploitation.

Grooming and sextortion: Bad actors build trusted connections with their child victims and exploit these relationships to commit abuse. These perpetrators may have sexual, financial, or other motivations.

Disclosure and reporting: Youth navigate all kinds of potentially harmful situations online. Understanding whether and how they seek help in these situations is critical to developing tools and resources to support them.

The data we collect in each of these areas allows us to monitor the overall state of the issue. With that knowledge, we, along with others in the child safety ecosystem, can take the most effective actions to defend children.

The impact

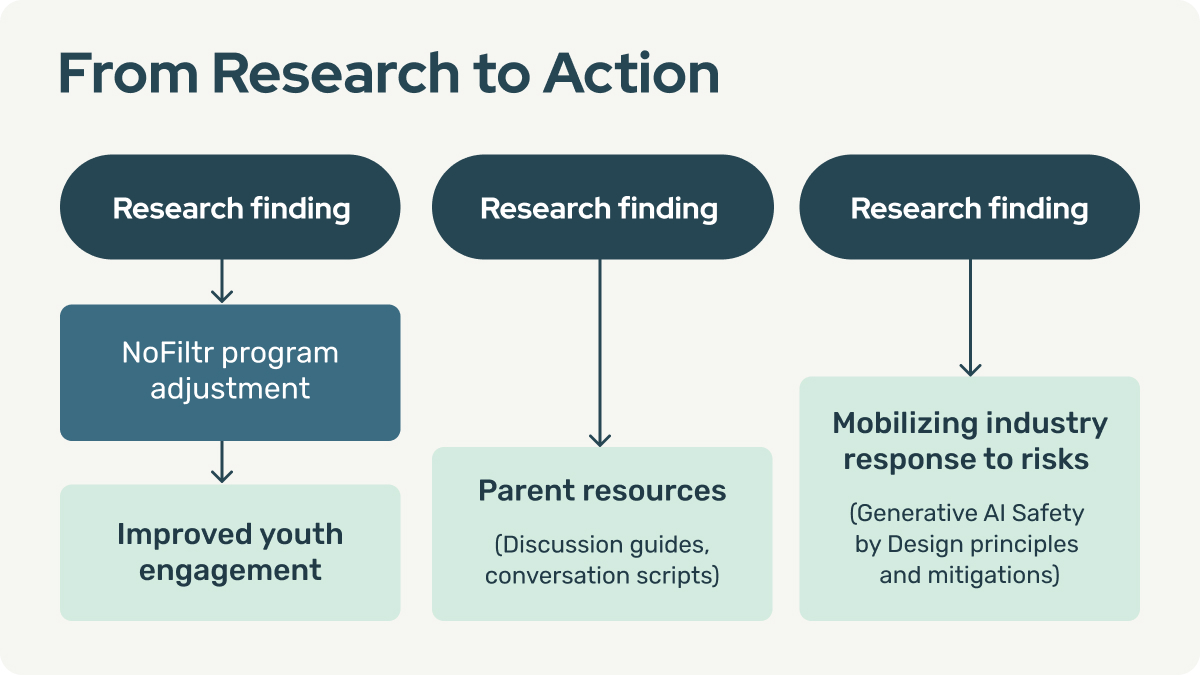

Thorn’s extensive research leads to tangible improvements to tools, programs, and resources that have real-world impact on child safety.

For example, the insights we gather lead to more effective campaigns through our NoFiltr youth program. With them, we structure our programs and communications in ways that better resonate with youth, in their language and about issues that affect their everyday lives.

With deeper knowledge of youth behaviors, as well as of what parents and caregivers grapple with, we also develop more relevant guides for our Thorn for Parents resource hub. In 2023, 10,000 parents visited the Thorn for Parents site for these tips.

More broadly, our sextortion research led to the development of the Stop Sextortion campaign in partnership with Meta. The campaign has been translated into many languages and provides a critical resource for parents and teens alike.

And we don’t just monitor current trends; we also stay vigilant to emerging threats. In a trailblazing collaboration, our research on the risks generative AI poses to child safety brought pivotal and timely insights to the broader ecosystem, illuminating the current scale and nature of this potent technology and the threats its misuse poses to children.

Build your own knowledge

If you’re interested in getting up to speed on the risks children face online or how you can help recognize and prevent child sexual abuse or exploitation, we invite you to explore our Research Center for easy-to-read explanations and resources.

We share our findings publicly to activate our broader community’s awareness and action. After all, it’s up to all of us to keep the children in our lives safe from sexual harms.

Embedded in our Thorn for Parents resources are key takeaways on how to have judgment-free conversations: Start early. Listen often. Avoid shame.

At Thorn, we heed that guidance by asking youth about their experiences, feelings, and perspectives, while keeping our finger on the pulse of technology’s impact on child safety.

In this way, our research helps us stay nimble, allowing us to constantly evolve our efforts to safeguard children from those looking to do them harm.