At Thorn, we’re dedicated to building technology to defend children from sexual abuse. Key to this mission is our CSAM detection solution, Safer, which enables content-hosting platforms to find and report child sexual abuse material (CSAM) on their platforms. In 2023, more companies than ever deployed Safer. This shared commitment to child safety is extraordinary, and it helped advance our goal of building a truly safer internet.

Safer’s community of customers spans a wide range of industries. Yet all of them host content uploaded by their users.

Safer empowers their teams to detect, review, and report CSAM at scale. Doing so at scale is critical. It means their content moderators and Trust and Safety teams can find CSAM amid the millions of pieces of content uploaded to their platforms every day. This efficiency saves time and speeds up their efforts. Just as importantly, Safer enables teams to report CSAM to central reporting agencies, like the National Center for Missing & Exploited Children (NCMEC), which is critical for child victim identification.

Safer’s customers rely on our predictive artificial intelligence and machine learning models and a comprehensive hash database to help them find CSAM. With their help, we’re making strides toward eliminating CSAM from the internet.

More files were processed through Safer in each of the last three months of 2023 than in 2019 and 2020 combined. These inspiring numbers indicate a shift in priorities for many companies as they strengthen their efforts to center the safety of children and their users.

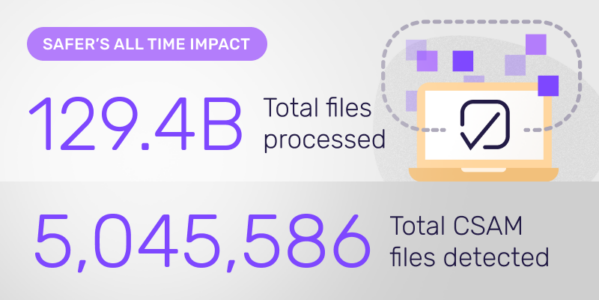

In total, Safer processed 71.4 billion files input by our customers. This 70% increase over 2022 was propelled in part by the addition of 10 new Safer customers. Today, 50 platforms, with millions of users and vast amounts of content, make up the Safer community, creating a significant and ever-growing force against CSAM online.

With 57+ million hashes currently, Safer offers a comprehensive database of hashes to detect CSAM. Through SaferList, customers can contribute hash values, helping to diminish the viral spread of CSAM across platforms within the Safer community.

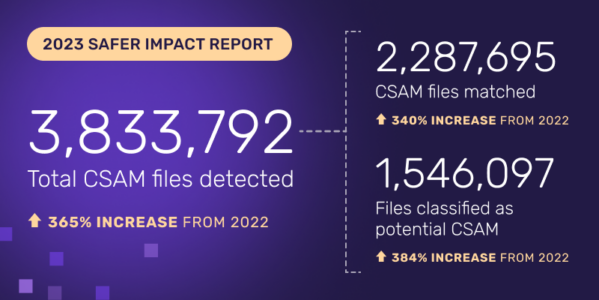

Our customers detected more than 2,000,000 images and videos of known CSAM in 2023. This means Safer matched the files’ hashes to those in a database of verified CSAM, identifying them. Hashes are like digital fingerprints, and using them allows Safer to programmatically determine whether a file has previously been verified as CSAM by NCMEC or other NGOs.
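To make the fingerprint idea concrete, here is a minimal sketch of exact-match hash lookup in general. It is not Safer’s implementation or API: the function names, the choice of SHA-256, and the in-memory set are all assumptions made for illustration, and the placeholder byte strings stand in for real file contents.

```python
import hashlib
from typing import Iterable, Set


def fingerprint(data: bytes) -> str:
    """Return a hex digest that acts as the file's digital fingerprint.

    SHA-256 is an assumption for this sketch; the article does not say
    which hash algorithms are actually used.
    """
    return hashlib.sha256(data).hexdigest()


def build_hash_list(verified_files: Iterable[bytes]) -> Set[str]:
    """Build a lookup set from files that were previously verified."""
    return {fingerprint(data) for data in verified_files}


def is_known_match(data: bytes, hash_list: Set[str]) -> bool:
    """True if the file's fingerprint appears in the hash list."""
    return fingerprint(data) in hash_list


# Hypothetical placeholder bytes, used only to exercise the lookup.
hash_list = build_hash_list([b"previously verified file"])
match = is_known_match(b"previously verified file", hash_list)
no_match = is_known_match(b"never-seen file", hash_list)
```

A cryptographic hash like this one matches only byte-identical files, so the lookup is a single set membership check and the platform never needs to store or view the original file to recognize a repeat.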

In addition to detecting known CSAM, our classifiers detected more than 1,500,000 files of potential unknown CSAM. Safer’s image and video classifiers use artificial intelligence to predict whether new content is likely to be CSAM and flag it for further review.

Altogether, Safer detected more than 3,800,000 files of known or potential CSAM, a 365% increase in just a year, showing both the accelerating scale of the issue and the power of a unified fight against it.

Last year continued to highlight the profound impact of a coordinated approach to eliminating CSAM online. Since 2019, Safer has processed 129.4 billion files from content-hosting platforms and, of those, detected 5 million CSAM files. Each year that we remain focused on this mission helps shape a safer internet for children, digital platforms, and their users.

Fighting the spread of CSAM requires a united front. Unfortunately, efforts across the tech industry remain inconsistent, and the companies that are proactive rely on siloed data. Thorn’s Safer solution is here to change that.

Originally published in June 2024 on safer.io