Our ability to add content to our favorite apps or chat with others online is part of how we socialize with our digital communities. Yet these same features are also exploited by bad actors to harm children, for example by soliciting, creating, and sharing child sexual abuse material (CSAM), and even by sextorting children online.

Platforms face an uphill battle trying to combat this misuse of their features. But it's critical that they do so. Not only does online child sexual exploitation put children and platform users at risk, but hosting CSAM is illegal for platforms.

That's why in 2019, Thorn launched our solution Safer to help content-hosting platforms end the viral spread of CSAM and, with it, revictimization. Today, we're excited to announce the next step in that effort: a significant expansion of our capabilities with Safer Predict.

The power of AI to defend children

Our core Safer solution, now called Safer Match, offers a technology called hashing-and-matching to detect known CSAM: material that has been reported but continues to circulate online. To date, Safer has matched over 3 million CSAM files, helping platforms stop CSAM's viral spread and the revictimization it causes.
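As a rough illustration of hashing-and-matching in general (not Safer Match's actual implementation or API, which this post does not describe), the sketch below fingerprints a file with SHA-256 and checks the digest against a set of hashes of previously reported material. The function names `file_digest` and `is_known` are hypothetical, and the choice of SHA-256 is an assumption. An exact cryptographic hash only matches byte-identical files, so production systems commonly pair it with perceptual hashes that tolerate resizing or re-encoding.

```python
from __future__ import annotations

import hashlib
import tempfile
from pathlib import Path


def file_digest(path: Path, chunk_size: int = 1 << 20) -> str:
    """Return the hex SHA-256 digest of a file, reading it in chunks.

    SHA-256 is an illustrative assumption, not a documented Safer choice.
    """
    digest = hashlib.sha256()
    with path.open("rb") as fh:
        # Read fixed-size chunks until read() returns b"" at end of file.
        for chunk in iter(lambda: fh.read(chunk_size), b""):
            digest.update(chunk)
    return digest.hexdigest()


def is_known(path: Path, known_hashes: set[str]) -> bool:
    """Hypothetical helper: True if the file's digest is on the known-hash list."""
    return file_digest(path) in known_hashes


if __name__ == "__main__":
    with tempfile.TemporaryDirectory() as tmp:
        # Benign placeholder bytes stand in for an uploaded file.
        sample = Path(tmp) / "upload.bin"
        sample.write_bytes(b"placeholder content")
        known = {hashlib.sha256(b"placeholder content").hexdigest()}
        print(is_known(sample, known))  # prints True

        # Changing a single byte yields a different digest, so no match.
        sample.write_bytes(b"placeholder contenT")
        print(is_known(sample, known))  # prints False
```

Keeping the known hashes in a set makes each lookup an average constant-time operation, so checking an upload costs roughly one pass over its bytes regardless of how long the hash list grows. The second check also shows why exact hashing alone cannot catch altered copies or newly created material.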

With Safer Predict, our efforts go even further. This AI-driven solution detects new and unreported CSAM images and videos, and to date has classified nearly 2 million files as potential CSAM.

Now, we're proud to share that Safer Predict can also identify potentially harmful conversations that include or could lead to child sexual exploitation. By leveraging state-of-the-art machine learning models, Safer Predict empowers platforms to:

Cast a wider net for CSAM and child sexual exploitation detection

Identify text-based harms, including discussions of sextortion, self-generated CSAM, and potential offline exploitation

Scale detection capabilities efficiently

To understand the impact of Safer Predict's new capabilities, particularly around text, it helps to understand the scope of child sexual exploitation online.

The growing challenge of online child exploitation

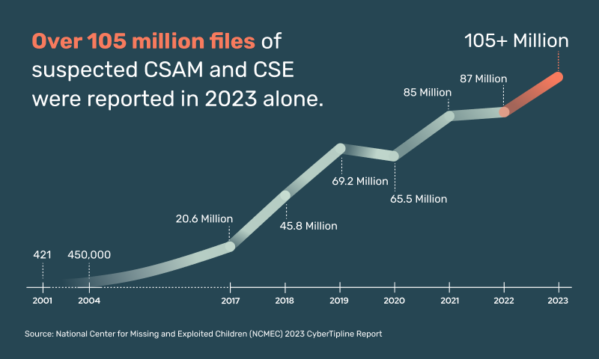

Child sexual abuse and exploitation is rising online at alarming rates.

In January 2024, U.S. Senators demanded that tech executives take action against the devastating rise of child sexual abuse happening on their platforms.

The reality is that bad actors are misusing social and content-hosting platforms to exploit children and to spread CSAM faster than ever. They also take advantage of new technologies like AI to rapidly scale their malicious tactics.

Hashing-and-matching is critical to tackling this issue, but the technology doesn't detect text-based exploitation. Nor does it detect newly generated CSAM, which may depict a child in active abuse. Both text and novel CSAM represent high-stakes situations: detecting them can give platforms a greater opportunity to intervene when active abuse may be occurring and to report it to NCMEC.

Safer Predict's predictive AI technologies allow platforms to detect these harms on their platforms. These efforts can help uncover information critical to identifying children in active abuse situations and to law enforcement's ability to remove child victims from harm.

AI models built on trusted data

While it seems AI is everywhere these days, not all models are created equal. When it comes to AI, validated data matters, especially for detecting CSAM and child sexual exploitation.

Thorn's machine learning image and video classification models are trained on confirmed CSAM provided by our trusted partners, including NCMEC. In contrast, broader moderation tools designed for many kinds of harm might simply combine age recognition data with adult pornography, which differs significantly from CSAM.

Safer Predict's text detection models are trained on messages:

Discussing sextortion

Asking for, transacting in, and sharing CSAM

Asking for a minor's self-generated sexual content, as well as minors discussing their own self-generated content

Discussing access to children and sexually harming them in an offline setting

Because they are trained on confirmed CSAM and real conversations, Safer Predict's models can predict the likelihood that images and videos contain CSAM and that messages contain text related to child sexual exploitation.

Together, Safer Match and Safer Predict provide platforms with comprehensive detection of CSAM and child sexual exploitation, which is critical to safeguarding children both online and off.

Partnering for a safer internet

At Thorn, we're proud to have the world's largest team of engineers and data scientists dedicated solely to building technology to combat child sexual abuse and exploitation online. As new threats emerge, our team works hard to respond, for example by combating the rise in sextortion threats with Safer Predict's new text-based detection solution.

The ability to detect potentially harmful messages and conversations between bad actors and unsuspecting children is a huge leap in our mission to defend children and create a safer digital world, where every child is free to simply be a kid.