The National Center for Missing & Exploited Children (NCMEC) recently released its 2023 CyberTipline report, which captures key trends from reports submitted by the public and digital platforms on suspected child sexual abuse material and exploitation. At Thorn, these insights are critical to our efforts to defend children from sexual abuse and to the innovative technologies we build to do so.

This year, two clear themes emerged from the report: child sexual abuse remains a fundamentally human issue, one that technology is making significantly worse; and innovative technologies must be part of the solution to this crisis in our digital age.

The scale of child sexual abuse continues to grow

In 2023, NCMEC’s CyberTipline received a staggering 36.2 million reports of suspected child sexual exploitation. These reports included more than 104 million files of suspected child sexual abuse material (CSAM): images and videos that document the sexual abuse of a child. These numbers should give us immediate pause because they represent real children enduring horrific abuse. They underscore the urgent need for a comprehensive response.

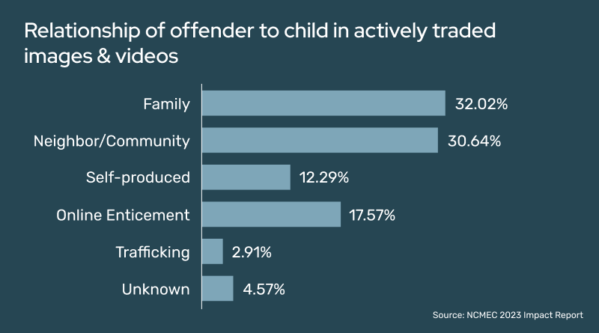

NCMEC’s Impact Report, released earlier this year, shed light on the creators of much of this CSAM, including files that NCMEC’s Child Victim Identification Program was already aware of. Unfortunately, for nearly 60% of the children already identified in distributed CSAM, the abusers were people with whom children should be safe: family members and trusted adults within the community (such as teachers, coaches, and neighbors) are most likely to be behind the CSAM actively circulating on the internet today. These adults exploit their relationships to groom and abuse children in their care. They then share, trade, or sell the documentation of that abuse in online communities, and the spread of that material revictimizes the child again and again.

Of course, the true scale of child sexual abuse and exploitation is larger than these submitted reports suggest. And modern technologies are accelerating predators’ ability to commit sexual harms against children.

This means we must meet scale with scale, in part by building and deploying advanced technologies to speed up the fight against child sexual abuse from every angle.

Technology must be part of the solution

The efficiency and scalability of technology can create a powerful, positive force. Thorn has long recognized the value of AI and machine learning (ML) for identifying CSAM. Our Safer Predict solution uses state-of-the-art predictive AI to detect unknown CSAM circulating on the internet, as well as new CSAM files being uploaded every day. Through our text classifier, it can now also detect potentially harmful conversations between bad actors and children, such as sextortion and solicitation of nudes. These solutions provide critical tools needed to stop the spread of CSAM and the abuse happening online.

Additionally, the same AI/ML models that detect CSAM online also dramatically speed up law enforcement efforts in child sexual abuse investigations. As agents conduct forensic review of mountains of digital evidence, classifiers like the one in Safer Predict use AI models to automate CSAM detection, saving agents significant time. This helps law enforcement identify child victims faster so they can remove those children from nightmare situations.

All across the child safety ecosystem, speed and scale matter. Cutting-edge technologies must be used to disrupt the behaviors that encourage and incentivize abuse, and the pipelines for CSAM, while also improving prevention and mitigation strategies. Technology can expedite our understanding of emerging threats, help Trust & Safety teams create safer online environments, improve hotline response activities, and speed up victim identification.

In other words, deploying technology across every facet of the child safety ecosystem will be critical if we are to do anything more than bring a garden sprinkler to a forest fire.

Tech companies play a key role

From 2022 to 2023, reports of child sexual abuse and exploitation to NCMEC rose by more than 4 million. While this increase correlates with growing abuse, it also means platforms are doing a better job of detecting and reporting CSAM. This is a critical part of the fight against online exploitation, and we applaud the companies investing in protecting children on their services.

However, the reality is that only a small fraction of digital platforms submit reports to NCMEC. Every content-hosting platform must recognize the role it plays in the child safety ecosystem and the opportunity it has to protect children and its community of users.

When platforms detect CSAM and child sexual exploitation and submit reports to NCMEC, that data can provide insights that lead to a child being identified and removed from harm. That’s why it’s critical for platforms to submit high-quality reports with key user information that helps NCMEC pinpoint the location of the offense or the appropriate law enforcement agency.

Furthermore, the internet’s interconnectedness means abuse doesn’t stay on one platform. Children and bad actors move fluidly across spaces; abuse can start on one platform and continue on another. CSAM, too, spreads virally. This means it’s imperative that tech companies collaborate in innovative ways to combat abuse as a united front.

A human issue made worse by emerging tech

Last year, NCMEC received 4,700 reports of CSAM or other sexually exploitative content related to generative AI. While that sounds like a lot (and it is), these reports represent a drop in the bucket compared to CSAM produced without AI.

The growing number of reports involving generative AI should, however, set off alarm bells. Just as the internet itself accelerated offline and online abuse, misuse of AI has the potential to pose profound threats to child safety. AI technologies make it easier than ever to create content at scale, including CSAM as well as solicitation and grooming text. AI-generated CSAM ranges from AI adaptations of original abuse material, to the sexualization of benign content, to fully synthetic CSAM, often produced by models trained on CSAM involving real children.

In other words, it’s critical to remember that child sexual abuse remains a fundamentally human issue, impacting real children. Generative AI technologies in the wrong hands facilitate and accelerate this harm.

In the early days of the internet, reports of online abuse were relatively few. But from 2004 to 2022, aided by digital tech, they skyrocketed from 450,000 reports to 87 million. During that time, we collectively failed to adequately address the ways technology could compound and accelerate child sexual abuse.

Today, we know much more about how child sexual abuse happens and the ways technology is misused to sexually harm children. We’re better positioned to rigorously assess the risks that generative AI and other emerging technologies pose to child safety. Every player in the tech industry has a responsibility to ensure children are protected as these applications are built. That’s why we collaborated with All Tech Is Human and leading generative AI companies to commit to principles that prevent the misuse of AI to further sexual harms against children.

If we fail to address the impacts of emerging tech with our eyes open, we risk throwing open Pandora’s box.

Together, we can create a safer world

From financial sextortion to online enticement of children for sexual acts, the 2023 NCMEC report captures the continued growth of disturbing trends against children. Bringing an end to child sexual abuse and exploitation will require a force of even greater might and scale, one that requires us to see technology as our ally.

That’s why Thorn continues to build innovative technology, expand partnerships across the child safety ecosystem, and bring more tech companies and lawmakers to the table to empower them to take action on this issue.

Child sexual abuse is an issue we can solve. When the best and brightest minds wield powerful innovations and work together, we can build a safer world, one where every child is free to simply be a kid.